For most of us it started when our iRobot Roomba learned how to navigate the living room. We just turned it on and left for the grocery store; by the time we got back, it had cleaned the floor and returned to its docking station to charge. In 2004 that was pretty impressive. So impressive that sometimes we'd just sit down and watch it bump into things until it learned where it had been and how to take the most efficient path to clean the entire floor.

Technology has come a long way since then, and although it may seem inconceivable, it won't be long before your car takes you to work while you sit back and enjoy the ride. But while you're relaxing on the road, your car will be working with the cloud to keep you safe, and unlike the Roomba, it won't bump into things to learn. To do this, it will use hundreds of onboard sensors, artificial intelligence, machine learning, a dizzying amount of compute power, and off-board cloud services to make the best decisions on your behalf. In addition to what it can "see," the vehicle will also need information about what lies beyond the range of its sensors.

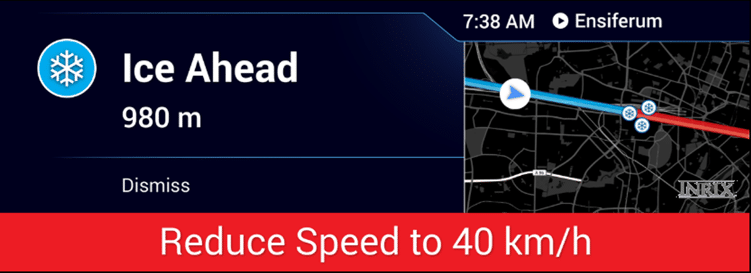

An enormous amount of money is being spent in the 3D or HD mapping space, where intersections are being mapped to centimeter resolution, and where cameras, sonar, radar, and LiDAR (a portmanteau of light and radar) are being used to distinguish pedestrians on the sidewalk from pedestrians in the roadway, along with thousands of other critical identifications.

In addition to navigational maps and close-proximity detection technologies, autonomous vehicles will also be continuously connected to the cloud so that they can account for exception-based events happening ahead of them. These events, or incidents, will come from known sources, such as a planned construction project that closes two of four lanes three kilometers ahead or a previously reported collision, and, increasingly, from data shared by other vehicles that traveled the same path a few minutes earlier. Much as pooled GPS data powers traffic-aware routing today, incident detection and transmission will play a critical role in the safety of autonomous vehicles. Vehicle-to-cloud networks will learn where potholes are, which locations see increased pedestrian traffic at which times of day, where crashes and airbag deployments are happening in real time, and where patches of ice have formed on the road. Because vehicles will share these observations through the cloud in near real time, fleets of cars will learn from each other.

The first wave of semi-autonomous vehicles and vehicles equipped with advanced driver-assistance systems (ADAS) is already here, and fully autonomous vehicles are less than two years away. INRIX is building the backend cloud services to aggregate, process, de-duplicate, and normalize the exception-based incident data generated by the millions of onboard sensors that will be deployed in this space, and to combine it with other datasets to fully inform both vehicles and people about exception-based events on the road. It is estimated that each fully autonomous vehicle could generate up to 4 terabytes of data per day. That's a lot of information, and the ability to collect it, learn from it, and share it with other connected vehicles will be key to making roads safer and more efficient.
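To make the de-duplication step a little more concrete, here is a minimal Python sketch of one way incident reports from many vehicles might be collapsed into single events. It is an illustration under stated assumptions, not INRIX's implementation: the `IncidentReport` fields, the incident type labels, and the 50-meter radius and 10-minute window are all hypothetical choices. Two reports are treated as the same incident when they share a type and fall within that radius and window of an incident already kept.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass(frozen=True)
class IncidentReport:
    # Hypothetical report shape; real vehicle payloads will differ.
    kind: str         # e.g. "pothole", "ice" (illustrative labels)
    lat: float        # degrees
    lon: float        # degrees
    timestamp: float  # seconds since epoch


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points."""
    earth_radius_m = 6_371_000.0
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_m * asin(sqrt(a))


def deduplicate(reports, radius_m=50.0, window_s=600.0):
    """Collapse reports of the same kind that fall within radius_m meters and
    window_s seconds of the first report kept for that incident.

    Returns a list of (representative_report, report_count) pairs.
    """
    kept: list[IncidentReport] = []
    counts: list[int] = []
    for rep in sorted(reports, key=lambda rpt: rpt.timestamp):
        for i, existing in enumerate(kept):
            if (
                existing.kind == rep.kind
                and rep.timestamp - existing.timestamp <= window_s
                and haversine_m(existing.lat, existing.lon, rep.lat, rep.lon) <= radius_m
            ):
                counts[i] += 1  # duplicate: corroborates an existing incident
                break
        else:
            kept.append(rep)  # no match: this is a new incident
            counts.append(1)
    return list(zip(kept, counts))


# Usage: two nearby pothole reports merge; the ice report and the distant pothole stay separate.
reports = [
    IncidentReport("pothole", 47.6205, -122.3493, 0),
    IncidentReport("pothole", 47.6206, -122.3494, 120),
    IncidentReport("ice", 47.6205, -122.3493, 130),
    IncidentReport("pothole", 47.7000, -122.3493, 140),
]
result = deduplicate(reports)
for incident, count in result:
    print(incident.kind, count)  # prints: pothole 2, ice 1, pothole 1 (one per line)
```

The linear scan keeps the idea easy to follow; a system handling data at the volumes described above would more likely use a spatial index and would normalize incident types across sources before comparing them.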

Of course, this type of technology shift does not happen all at once, and there will be years of transition during which human drivers will need proactive awareness of what lies ahead, long before communication is purely machine-to-machine. Whether it's a crash two kilometers ahead that has caused a sudden stop in interstate traffic or a weather event that suggests reduced speeds would be prudent, drivers need a complete dataset covering exceptions to normal traffic flow, along with warnings about safety measures they should take. INRIX is currently consuming real-time vehicle sensor data, and is also investing in aggregating and publishing incidents from many other sources that could impede travel.

As more sensors are placed in vehicles, there will naturally be more opportunities to enhance the overall travel experience. Over time, vehicle sensor data, which requires no action from the driver, is likely to replace crowdsourced reports entered by humans as the main source of information about what's happening on the roads. Cloud providers that can consume, filter, interpret, and make sense of vehicle data will be best positioned to add value to the connected vehicle ecosystem. Computational horsepower and low-latency transmission will be critical to keeping travelers informed, and a purpose-built platform will be needed to deliver the greatest benefit to both vehicle and occupant.

The future of travel is connected, and INRIX has built the services needed to support a connected future in which vehicle-to-cloud-to-multi-vehicle communication will enhance safety and improve the operational efficiency of the road network.

By Jeff Summerson, Director, Product Management